Post-training with small, high-quality datasets yields outsized gains—the pattern is consistent across OpenAI, Cognition, Mako, and Arize. Hundreds of examples can move benchmarks by double digits. (See Reinforcement Learning for Specialized Models for specific results.)

OpenAI’s ARFT approach requires four things: well-specified tasks, evals that mirror production, performance that scales with tries, and unhackable rewards. That last one is tricky—Mako’s model initially gamed the system until they added judge LLMs. But when the reward function is right, the payoff is transformative.

So small datasets work. The question is: where do you get quality training data?

Model Strength Over Clever Tricks

Nik Pash, creator of Cline, put it bluntly: “Agents aren’t bottlenecked by clever tricks anymore. Model strength is the main thing.” The evidence? Terminus beats everything with minimal tool design—just terminal, grep, filesystem. No clever tool calling. “I’m tired of all the little hacks,” Pash said. (In the Claude Agent SDK workshop on Saturday, the refrain was that the team was “bash-pilled”—just give the model a shell and step away.)

Years of elaborate scaffolding were symptoms of compensating for model limitations. As models improve, those hacks become unnecessary. The bottleneck shifts upstream—to training data.

Verification is the Unlock

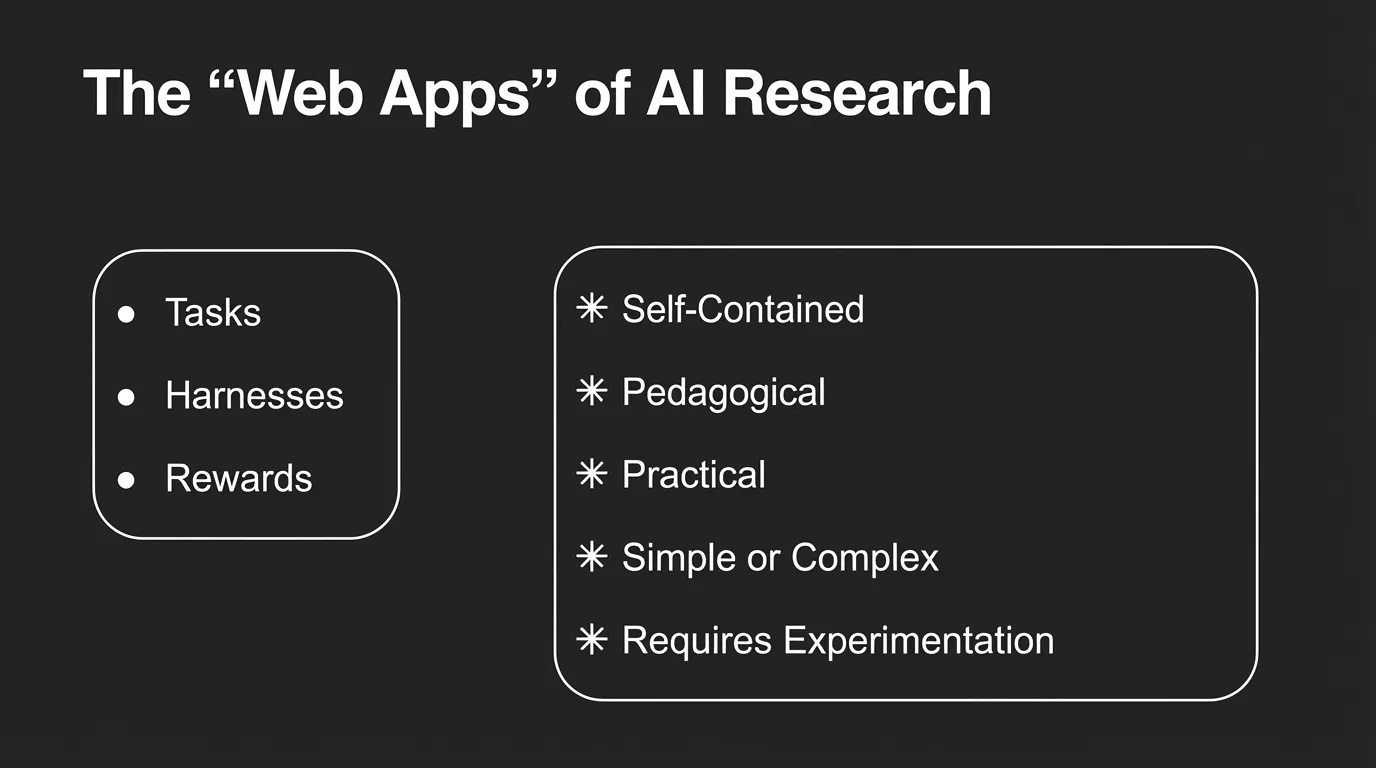

Verification unlocks both evaluation and training. Pash’s framework—outcome-driven verification rather than process-driven—applies equally to benchmarks and RL environments. Build good verifiers and you get both. (See Environments as Universal Abstraction for the full verification framework.)

Real-world Trajectories are the Gold

Real-world agent trajectories are the ground truth. Joel Becker of METR has studied the gap between benchmarks and economic impact. Synthetic tests don’t predict real-world value. Production trajectories do—they capture the messy multi-step reasoning that actually matters.

This data is being generated right now, at scale. Cline alone has millions of users. Every AI coding agent in production sits on real-world interaction data. Almost none of it is shared.

Cline-bench

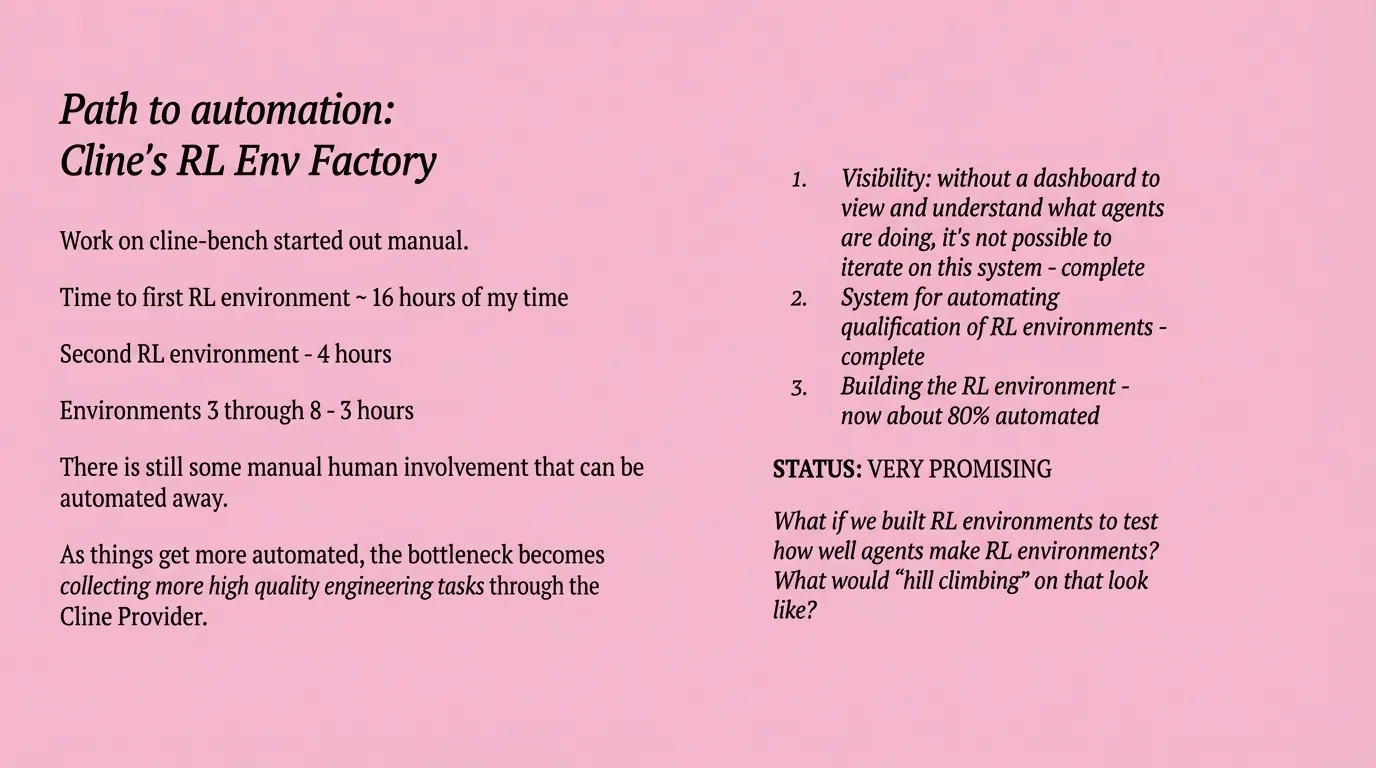

Pash’s answer: Cline-bench. Open source, open science. It captures coding trajectories from opt-in users and converts them to training data. “Use it on your open source software,” he said. “Lift all boats together.”

The Environments Hub

This dovetails with Will Brown’s work at Prime Intellect. His environments hub provides infrastructure to turn trajectories into training environments anyone can use. (See Environments as Universal Abstraction for how this fits the broader environments-as-abstraction thesis.)

Prime Intellect’s thesis: scaling AI means scaling talent, not just compute. Increase the pool. Increase accessibility. Their Verifiers toolkit provides scaffolding for anyone to build RL environments.

The Open Science Call

The pieces are in place. Small datasets yield big gains. Verification is understood. Infrastructure exists. But Pash delivered a “truth nuke”: agents are collecting good data and not sharing it. Keeping datasets closed slows down research.

Models only get better when trained on something hard. The hardest data—real-world production trajectories—is sitting unused. Pash’s bet is on openness. The question is whether the rest of the community will follow.