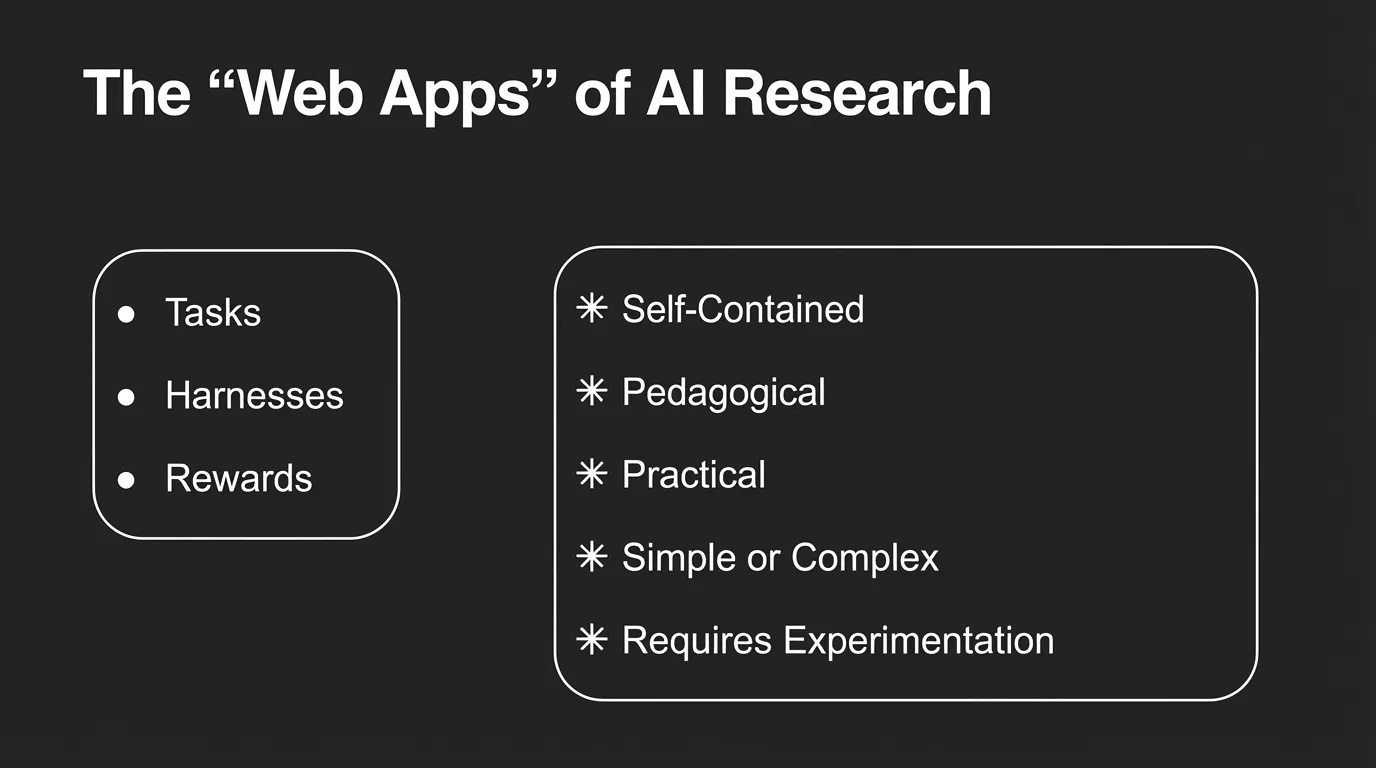

A fundamental architectural insight emerged across multiple sessions: environments have become the universal unit of abstraction for AI engineering. As Will Brown, Research Lead at Prime Intellect, put it: “Environments are the webapps of research.” This is a clarifying shift in how we think about training, evaluation, deployment, and iteration of AI systems.

Eno Reyes, CTO of Factory AI, and Nik Pash, creator of Cline, independently arrived at the same conclusion: a benchmark is an environment, a starting state, and a verifier. RL environments follow the same pattern. “The only real difference is how the reward is used,” Pash explained. “One is measure, one is improve.” Benchmarks, harnesses, verification systems, and RL training grounds are all instances of the same task/harness/rewards pattern.
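
The “measure versus improve” distinction can be sketched in a few lines of Python. This is a minimal illustration, not code from Factory AI or Cline: the names `Task`, `run_benchmark`, and `collect_training_signal` are hypothetical, and the “policy” is just any function from a starting state to an output.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Task:
    """Hypothetical task: a starting state plus a verifier."""
    start_state: str
    verifier: Callable[[str], float]  # maps a final output to a reward


def run_benchmark(tasks: List[Task], policy: Callable[[str], str]) -> float:
    """Measure: reduce the rewards to a single reported score."""
    rewards = [t.verifier(policy(t.start_state)) for t in tasks]
    return sum(rewards) / len(rewards)


def collect_training_signal(
    tasks: List[Task], policy: Callable[[str], str]
) -> List[Tuple[str, str, float]]:
    """Improve: keep (state, output, reward) triples for a later RL update."""
    samples = []
    for t in tasks:
        output = policy(t.start_state)
        samples.append((t.start_state, output, t.verifier(output)))
    return samples


tasks = [
    Task("2+2", lambda out: 1.0 if out == "4" else 0.0),
    Task("3+3", lambda out: 1.0 if out == "6" else 0.0),
]
always_four = lambda state: "4"  # a deliberately weak policy

print(run_benchmark(tasks, always_four))            # 0.5
print(collect_training_signal(tasks, always_four))
```

Both functions run the same tasks through the same verifiers. Only the last step differs: one averages the rewards into a score, the other keeps them attached to the outputs that earned them.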

Verification-Driven Development: The Tea Kettle Example

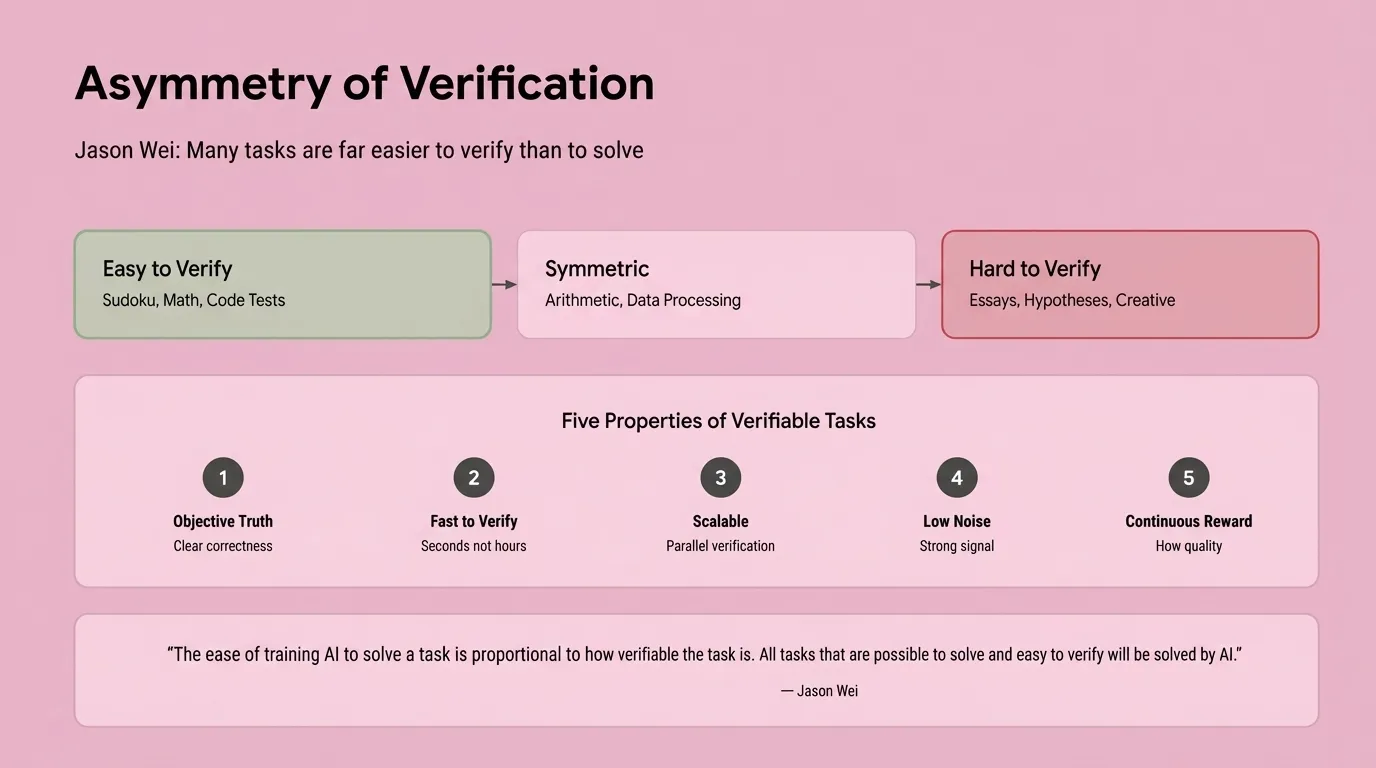

Environments need verification, and Pash’s “tea kettle” example shows what good verification looks like. The task is to boil water. A good verifier asks one question: is the kettle whistling? It ignores every other surface-level property. Bad verifiers ask process questions: Is the burner set to high? Have five minutes elapsed? Is the kettle on the front left burner? Did you filter the water? A good verifier is outcome-driven: it doesn’t care how you got there, though the traces of how the agent got there can still be useful for applying RL to the agentic system itself.
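
The contrast between the two kinds of verifier can be made concrete with a small sketch. Everything here is an assumption made for illustration: the `StoveState` fields, the function names, and the specific thresholds are invented to mirror the questions in the example, not taken from Cline.

```python
from dataclasses import dataclass


@dataclass
class StoveState:
    """Hypothetical snapshot of the world after the agent finishes."""
    kettle_whistling: bool
    burner_setting: str
    minutes_elapsed: float
    burner_position: str
    water_filtered: bool


def outcome_verifier(state: StoveState) -> float:
    """Good verifier: one question about the result."""
    return 1.0 if state.kettle_whistling else 0.0


def process_verifier(state: StoveState) -> float:
    """Bad verifier: checks how the work was done, never whether it worked."""
    followed_recipe = (
        state.burner_setting == "high"
        and state.minutes_elapsed >= 5
        and state.burner_position == "front-left"
        and state.water_filtered
    )
    return 1.0 if followed_recipe else 0.0


# Water boiled by an unexpected route: back burner, medium heat, unfiltered.
unorthodox = StoveState(True, "medium", 8.0, "back-right", False)
# Every step followed, but the kettle never whistles.
by_the_book = StoveState(False, "high", 5.0, "front-left", True)

print(outcome_verifier(unorthodox), process_verifier(unorthodox))    # 1.0 0.0
print(outcome_verifier(by_the_book), process_verifier(by_the_book))  # 0.0 1.0
```

The process verifier fails in both directions: it rejects a valid solution and accepts a run that never boiled the water.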

Reyes made the complementary point: “Many tasks are much easier to verify than to solve.” On this view, verifiability is the key constraint on AI capability: “The ability to solve is proportional to how verifiable it is for the AI to solve.” The practical advice is to focus engineering effort on creating rigorous verification boundaries rather than micromanaging the process.
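
A familiar illustration of the verify/solve gap, chosen here and not taken from the talk, is integer factorization: checking a proposed answer takes a handful of multiplications, while finding one requires a search. The function names below are hypothetical, and trial division stands in for “solving” only because it is short.

```python
from typing import List


def verify_factorization(n: int, factors: List[int]) -> bool:
    """Cheap check: every factor is nontrivial and the product equals n."""
    product = 1
    for f in factors:
        if f < 2:
            return False
        product *= f
    return product == n


def solve_factorization(n: int) -> List[int]:
    """Expensive search: trial division up to the square root of n."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)  # whatever remains is itself prime
    return factors


candidate = solve_factorization(8051)
print(candidate)                              # [83, 97]
print(verify_factorization(8051, candidate))  # True
print(verify_factorization(8051, [80, 100]))  # False
```

The verifier never needs to know how the factors were found, which is what makes it usable as a reward signal for any solver.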

The Environment Hub: Scaling the Abstraction

Brown’s work at Prime Intellect demonstrates how this abstraction scales. His team built the “environments hub” for creating, sharing, and running RL training and evaluations. The architecture treats environments as first-class entities with three components: task definition, harness for execution, and stream of rewards. This same pattern appears everywhere in modern AI engineering.
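
One way to picture the three components is as a single object that holds the tasks, the harness, and a reward function, and exposes the rewards as a stream. This is a sketch under stated assumptions, not the environments hub API: the `Environment` class, its field names, and the `rewards` method are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, List


@dataclass
class Environment:
    """Hypothetical container for the three components named above."""
    tasks: List[str]                         # task definitions
    harness: Callable[[str], str]            # executes a model or agent on one task
    reward_fn: Callable[[str, str], float]   # scores (task, output)

    def rewards(self) -> Iterator[float]:
        """Yield one reward per task, lazily, as a stream."""
        for task in self.tasks:
            output = self.harness(task)
            yield self.reward_fn(task, output)


# Toy instance: the "agent" upper-cases its input, and the reward checks
# that the output matches the expected upper-case string.
env = Environment(
    tasks=["hello", "world"],
    harness=lambda task: task.upper(),
    reward_fn=lambda task, output: 1.0 if output == task.upper() else 0.0,
)

for reward in env.rewards():
    print(reward)
```

Because the rewards come out as an iterator, the same object can feed an evaluation report or a training loop without changing the environment itself.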

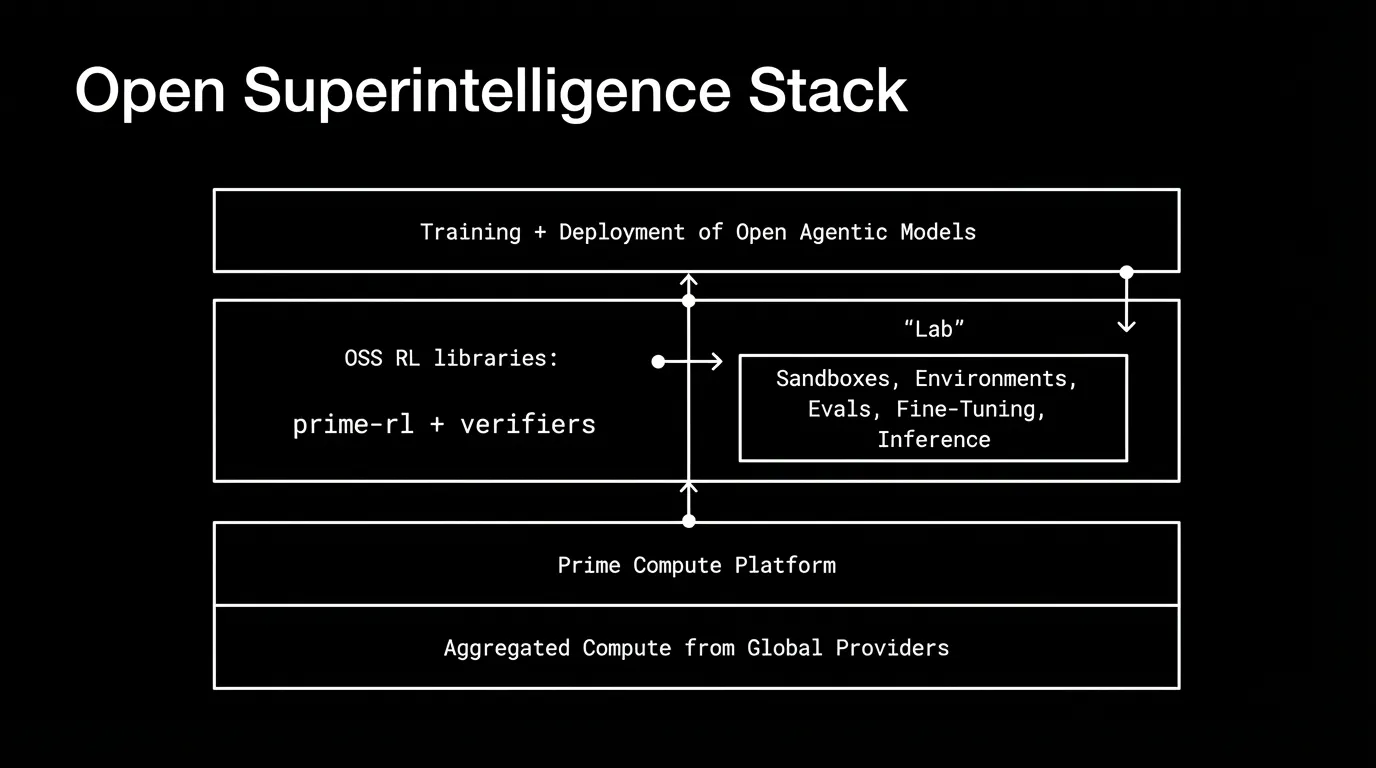

The power of this abstraction is its universality. Environments work for “research for the sake of research to advance our collective understanding of AI,” Brown explained, and equally for “taking small models and making them much better for custom purposes.” The same infrastructure serves both frontier research and practical fine-tuning.

From Research to Production

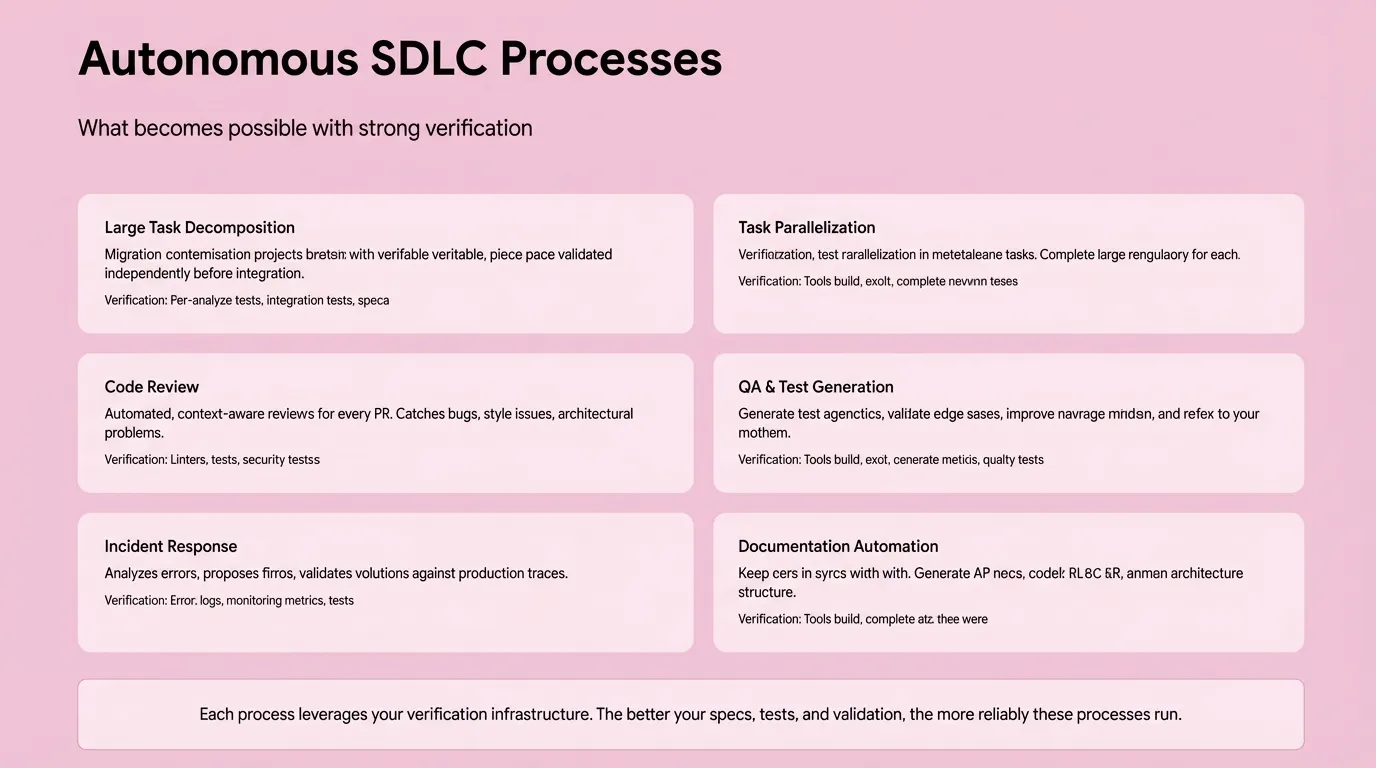

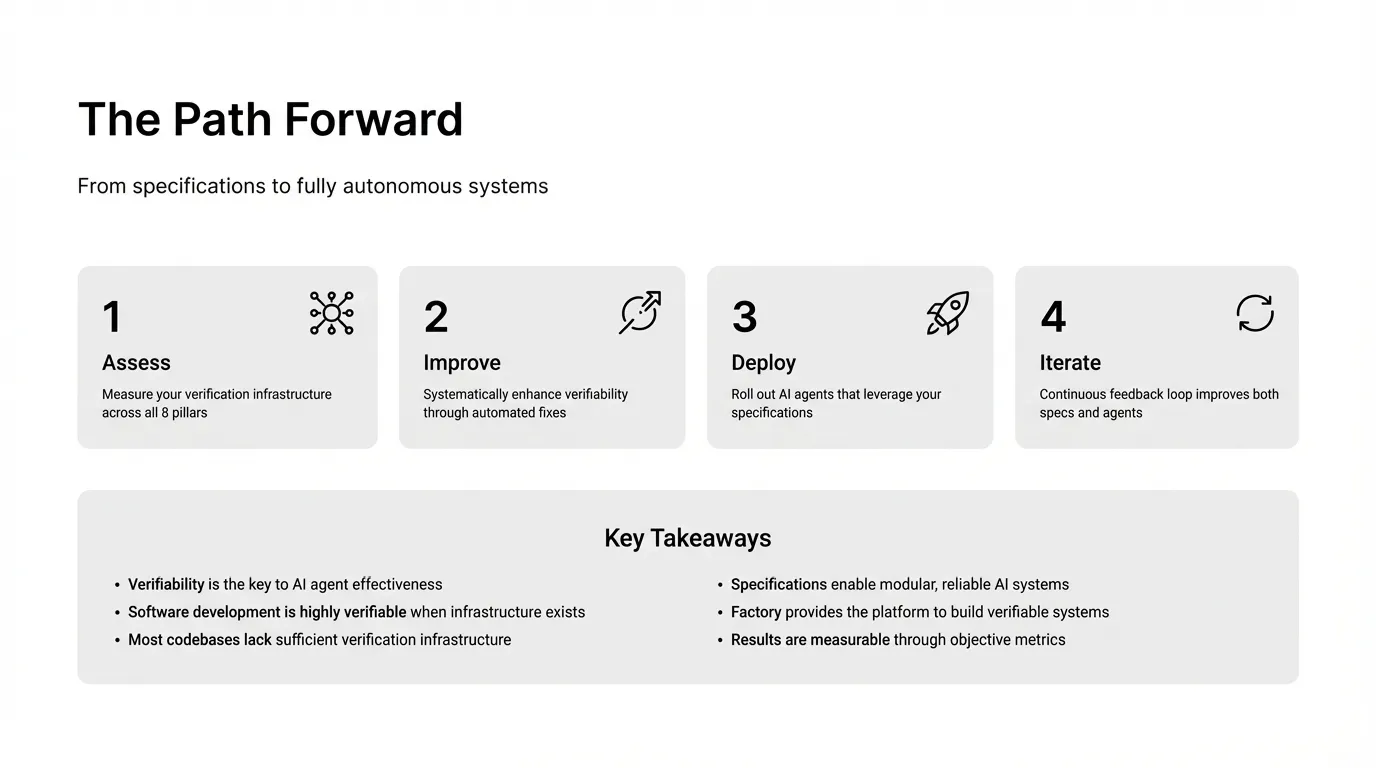

Reyes made the business case explicit. Factory AI has built its entire agent-ready framework around verifiability. “Invest in the environment feedback loop,” he urged. “One opinionated engineer can change the velocity of the entire business.” The rate-limiting step, he said, is “your organization’s validation criteria.”

Pash’s vision for Cline extends this to meta-automation. His team built an “RL environments factory” where subagents qualify tasks and generate verifiable environments from real-world coding work. “The bottleneck should shift from engineering to collecting quality tests,” he argued. He announced Cline-bench, their open-source benchmark that converts opt-in user data into training data, closing the loop from deployment back to training.

The New Unit of Everything

Environments are the fundamental unit for reasoning about AI systems. Web frameworks made app development accessible; now environment hubs and verification toolkits are “increasing the accessibility of doing AI research,” Brown observed. The vision is an “open superintelligent stack”: composable, shareable environments for collective progress.

Whether you’re building benchmarks, training with RL, or deploying production agents: think in environments. Define the starting state, specify the verifiable outcomes, and let the rewards flow. Training, evaluation, and deployment then unify into a single framework.