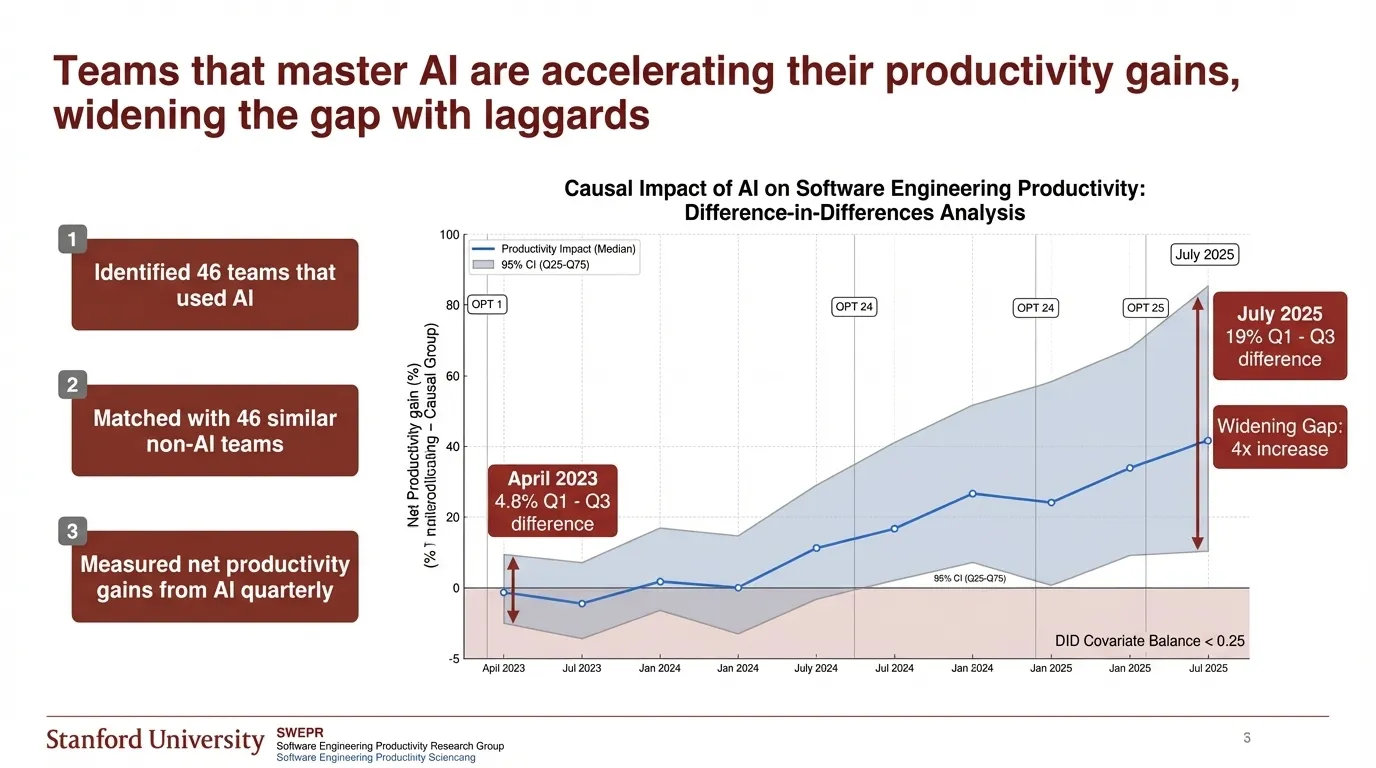

Stanford researcher Yegor Denisov-Blanch, who analyzed over 100,000 engineers across hundreds of companies as part of the Software Engineering Productivity Research Group, delivered the reality check: actual productivity looks nothing like the demos. And the gap between organizations effectively adopting AI and those lagging behind is widening.

“AI usage quality means more than quantity,” Denisov-Blanch emphasized. On greenfield projects, teams can see 35-40% productivity gains. But on brownfield codebases and high-complexity work, those gains plummet to 0-10%. If the codebase is a mess, the tools end up churning through and reworking code without actually refactoring it, and code quality can go down.

The research revealed a “rich gets richer effect.” The gap between AI-using teams and non-AI teams widened from 4.8% to 19% over two years, roughly a fourfold increase. Teams that already maintained clean codebases, established good practices, and invested in systematic tooling are seeing outsized benefits.
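As a quick sanity check on the figures quoted above, the arithmetic can be sketched in a few lines of Python. The variable names are illustrative, and the sketch assumes the two gap figures are directly comparable percentages, which the talk summary does not spell out.

```python
# Gap between AI-using and non-AI teams, as quoted in the talk summary.
# Assumption: both values are percentages measured the same way.
gap_start = 4.8   # percent, at the start of the two-year window
gap_end = 19.0    # percent, at the end of the two-year window

ratio = gap_end / gap_start            # how many times larger the gap became
absolute_change = gap_end - gap_start  # widening in percentage points

print(f"ratio: {ratio:.2f}x")
print(f"absolute widening: {absolute_change:.1f} points")
```

The ratio comes out just under 4, so “4x” is a rounded figure rather than an exact one.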

Bloomberg’s Lei Zhang put it bluntly: “Vibe coding is where 2 engineers can create the tech debt of 50 engineers.” Bloomberg has a massive codebase and more than 9,000 engineers, and much of its work is brownfield. AI tool usage “dropped really quickly once we moved back from greenfield,” Zhang said.

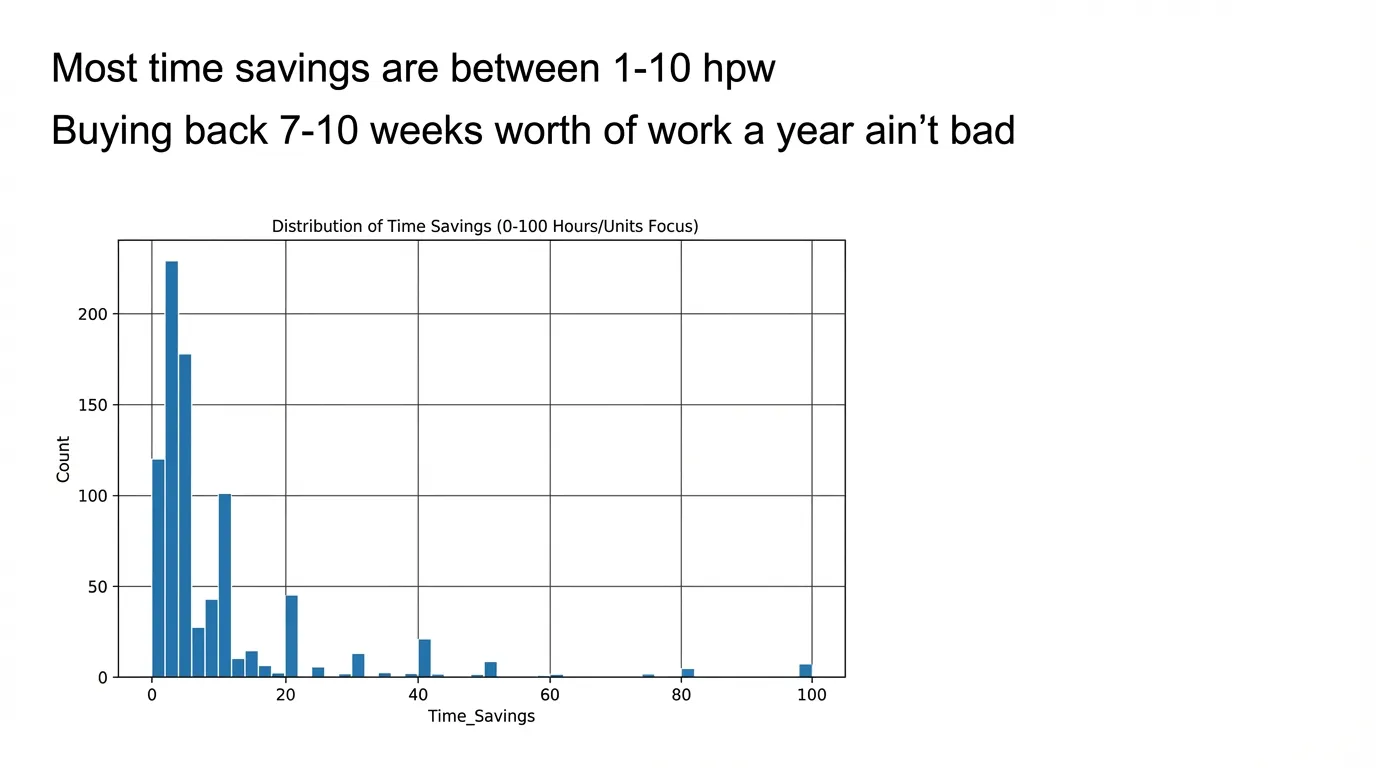

NLW’s survey of 3,500 use cases across eight impact categories revealed that coding is “further ahead” than other domains in delivering measurable ROI. That survey may be a biased sample, though: McKinsey’s parallel study found that only 7% of organizations believe they’re fully at scale with AI development tools. The remaining 93% are stuck in “pilot purgatory.”

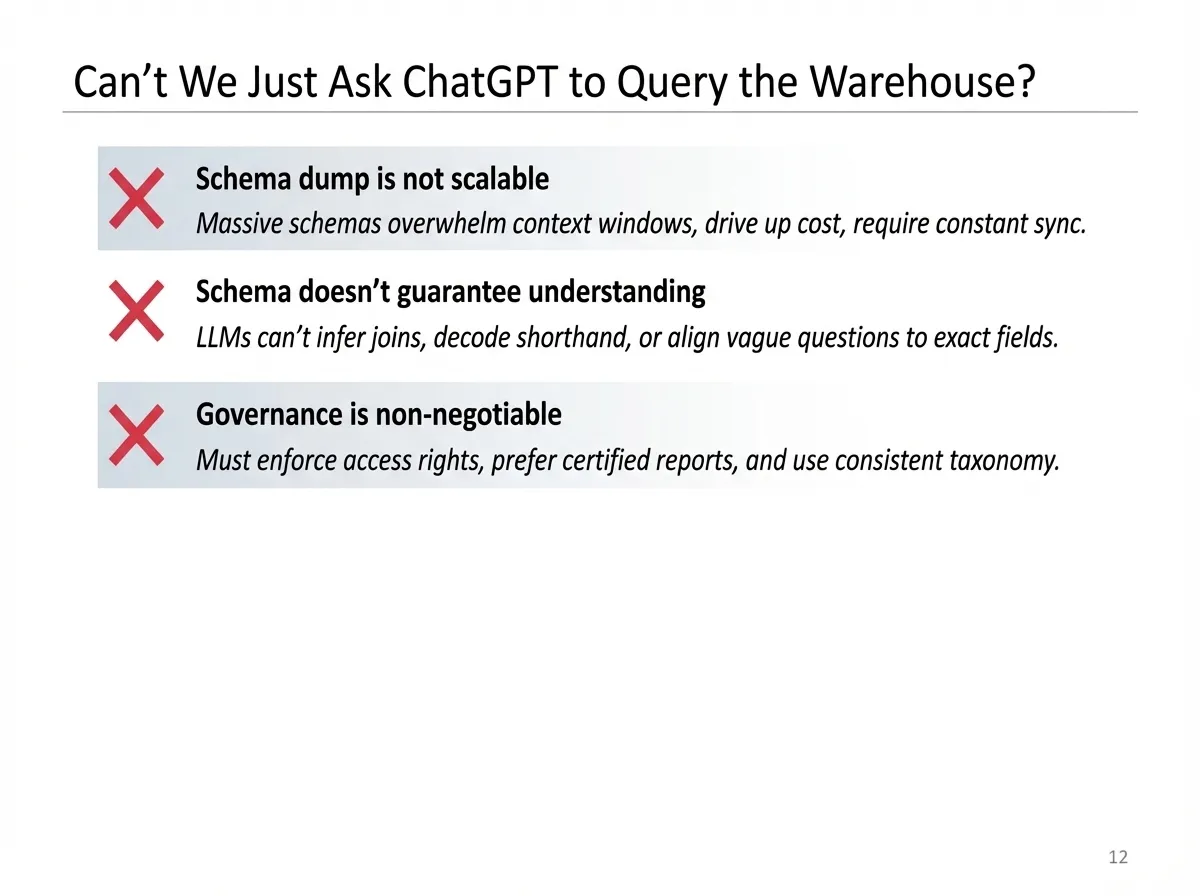

Asaf Bord from Northwestern Mutual brought enterprise reality into focus. “The gap between demo and production is so broad,” he stated plainly. Working in one of the world’s most risk-averse environments—an organization built on 40-50 year client relationships—Bord identified four barriers: unknown technology, messy real data, blind-trust bias, and budget impact concerns.

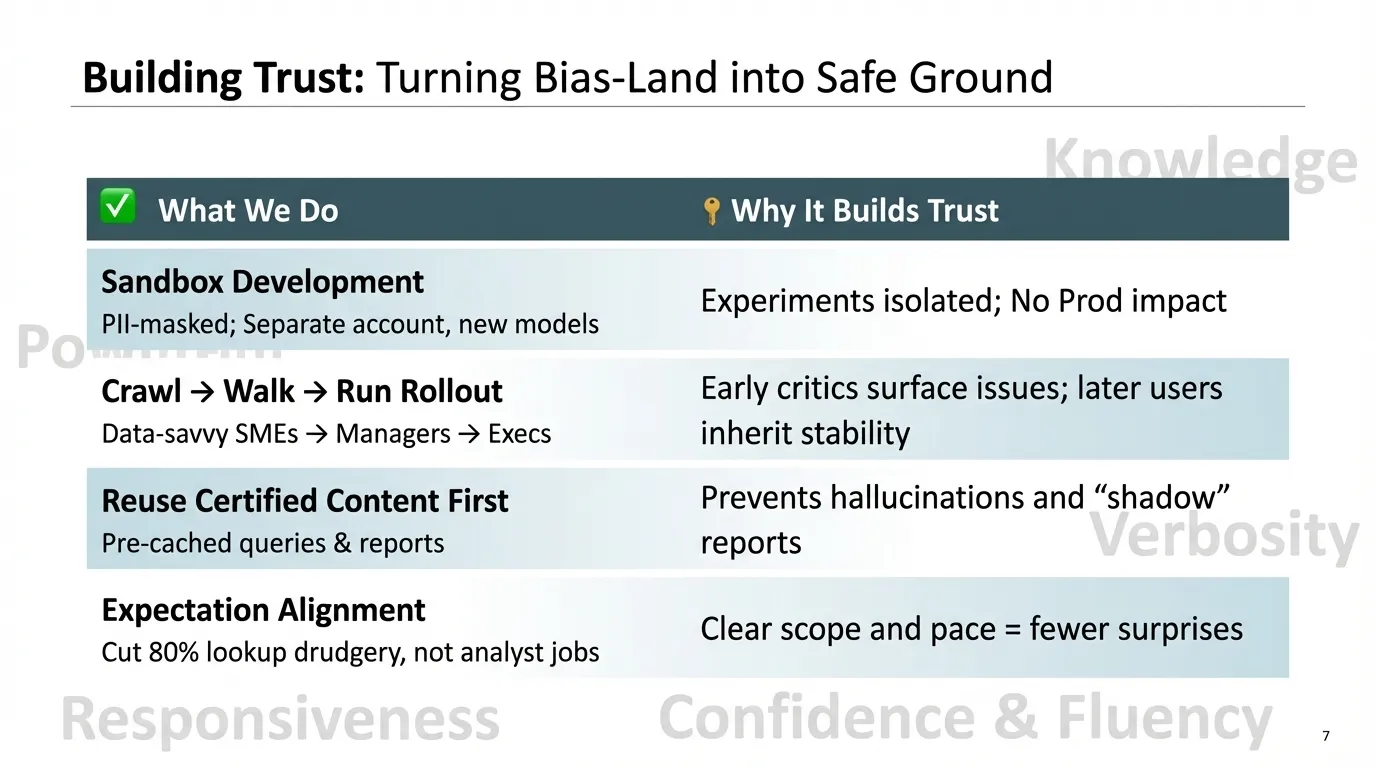

Bord’s strategy for building trust: sandbox development with PII-masked data, “crawl → walk → run” rollout starting with data-savvy SMEs, reusing certified content to prevent hallucinations, and expectation alignment—“cut 80% lookup drudgery, not analyst jobs.” Early critics surface issues; later users inherit stability.

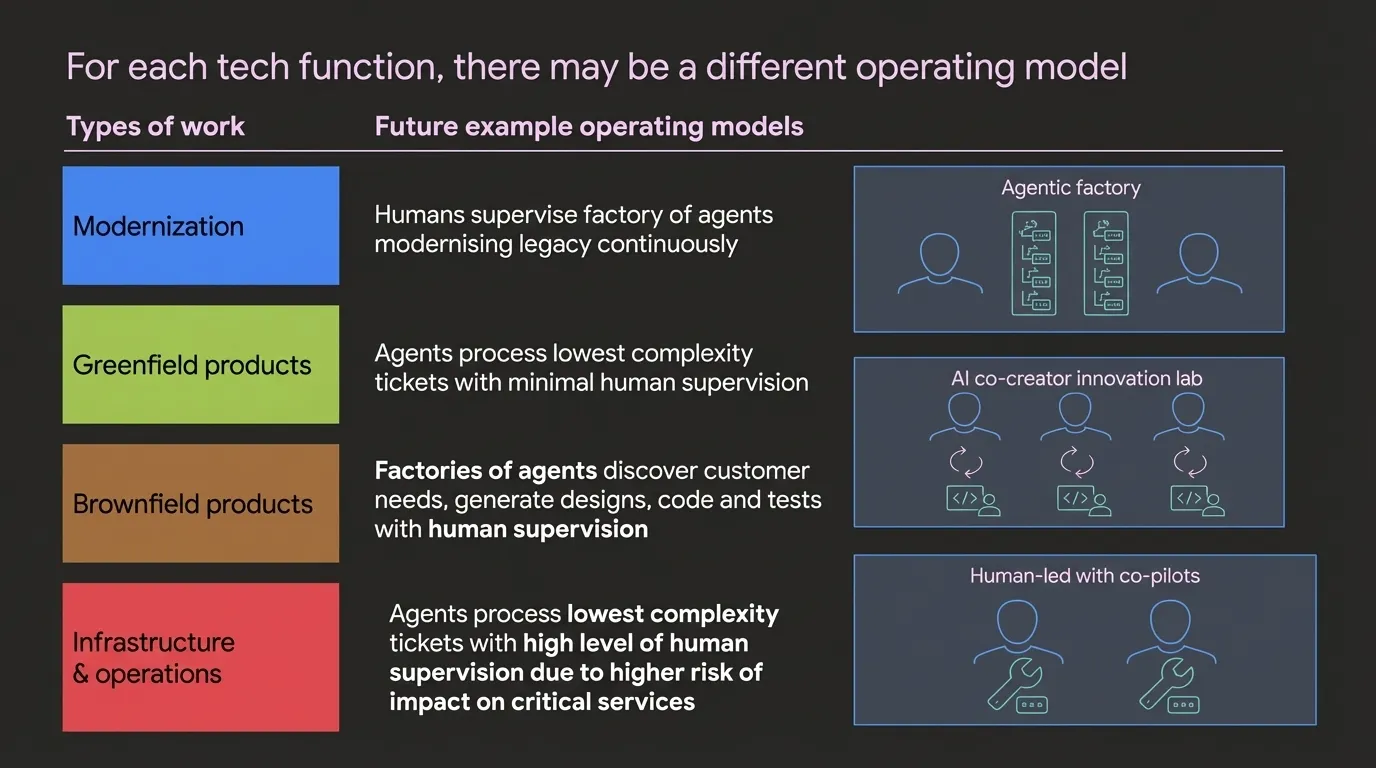

McKinsey’s Martin Harrysson and Natasha Maniar describe the emerging approach as “post-Agile” methodologies: different types of work require different human-agent operating models. Modernization can leverage “agentic factories” with agents working continuously under human supervision. Greenfield becomes an “AI co-creator innovation lab.” Brownfield and infrastructure work stays human-led with copilots, because higher risk requires higher oversight.
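One way to picture the mapping described above is as a simple lookup table. This is a minimal sketch, not McKinsey’s framework: the dictionary, the function name `operating_model_for`, and the fallback rule for unlisted work types are all hypothetical, and only the four work types and their labels come from the talk summary.

```python
# Work types and operating-model labels as quoted in the talk summary.
OPERATING_MODELS = {
    "modernization": "agentic factory",
    "greenfield": "AI co-creator innovation lab",
    "brownfield": "human-led with copilots",
    "infrastructure": "human-led with copilots",
}

# Assumption (not from the talk): unlisted work types fall back to the
# most heavily supervised model.
DEFAULT_MODEL = "human-led with copilots"


def operating_model_for(work_type: str) -> str:
    """Return the operating-model label for a work type (case-insensitive)."""
    return OPERATING_MODELS.get(work_type.strip().lower(), DEFAULT_MODEL)


print(operating_model_for("Greenfield"))
```

Defaulting to the human-led model is a design choice made here for illustration; it follows the stated principle that higher risk calls for higher oversight.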

The 35-40% gains are real—but only for organizations willing to get their houses in order first. The ROI is earned through discipline.